Drone, Inc. - SOFTWARE: Algorithms 101

“We’re going to find ourselves in the not too distant future swimming in sensors and drowning in data,” Lt. Gen. David Deptula, Air Force deputy chief of staff for intelligence, surveillance and reconnaissance (ISR), told a 2010 conference.85

That prediction may already have been overtaken by events. In a 2008 study of sensor-collected intelligence, two years before Deptula’s assessment, the Defense Science Board concluded: “Large staffs, often numbering in the thousands, are required in theater to accept and organize data that are broadcast in a bulk distribution manner. These analysts spend much of their time inefficiently sorting through this volume of information to find the small subset that they believe is relevant to the commander’s needs rather than interpreting and exploiting the data selected on current needs to create useful information.”86

To sort through this haystack, the military has turned to algorithms. An algorithm is any sequence of steps used to solve a problem or complete a task, usually to make complex or repetitive work easier. Algorithms are most often associated with computing, but they now shape many aspects of daily life.

“In areas ranging from banking and employment to housing and insurance, algorithms may well be kingmakers, deciding who gets hired or fired, who gets a raise and who is demoted, who gets a 5 percent or 15 percent interest rate,” writes Frank Pasquale, a law professor and the author of the book, The Black Box Society. “People need to be able to understand how they work, or don’t work. The data used may be inaccurate or inappropriate. Algorithmic modeling or analysis may be biased or incompetent.”87

In the world of drones, multiple algorithms have been unobtrusively embedded in the sensors as well as in the targeting technologies. Ultimately, these algorithms can help decide who lives and who dies.

Most algorithms are fairly generic. The accuracy of the results they produce depends on a variety of factors, beginning with the quality of the data they receive, the assumptions behind the data, how the data is weighted, and the “learning model” by which they are trained to filter out wrong answers.

While algorithms can identify patterns from a sea of data, the more complex or dirty the data is, the more rules and data “attributes” an algorithm needs to accomplish a task. A simple example might be to show a computer multiple images of trees and tanks, and then ask it to count the number of tanks and trees in a new photograph. Likewise, one might ask a computer to identify “men with guns” after feeding it pictures of guns.

Just like humans, algorithms improve when they are taught well and get lots of practice. Thus an algorithm that has been trained on 100 different pictures of guns will probably be more accurate than an algorithm that has studied only ten.

The accuracy of an algorithm can be measured by its false-positive rate (how often it mistakenly counts men without guns as men with guns) and its false-negative rate (how often it counts men with guns as men without guns). While an algorithm can be trained to lower both rates, there will always be circumstances that can trip it up, such as a target who wraps a blanket around his guns, or someone holding firewood.
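To make the two error rates concrete, here is a minimal sketch in Python. The ten labels are invented for illustration and do not come from any real system; `True` stands for “man with gun.”

```python
def error_rates(truth, predicted):
    """Return (false_positive_rate, false_negative_rate).

    truth and predicted are equal-length lists of booleans,
    where True means "man with gun".
    """
    pairs = list(zip(truth, predicted))
    false_pos = sum(1 for t, p in pairs if not t and p)   # unarmed, flagged as armed
    false_neg = sum(1 for t, p in pairs if t and not p)   # armed, missed
    negatives = sum(1 for t in truth if not t)            # all unarmed people
    positives = sum(1 for t in truth if t)                # all armed people
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


# Hypothetical ground truth and algorithm output for ten people.
truth     = [True, True, True, True, False, False, False, False, False, False]
predicted = [True, True, True, False, True, False, False, False, False, False]

fpr, fnr = error_rates(truth, predicted)
print(f"false-positive rate: {fpr:.2f}, false-negative rate: {fnr:.2f}")
```

In this made-up sample, one of six unarmed people is flagged as armed and one of four armed people is missed. Which of the two errors matters more is a human judgment, not something the arithmetic can settle.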

Here, to set the stage before we delve into the specific technologies and their drawbacks, are four examples of key algorithms used by the Pentagon in the drone war. The first two are motion-tracking algorithms: the Kalman filter and the Interacting Multiple Model filter; the third is Random Decision Forests, a learning algorithm; and finally, Greedy Fragile, a targeting algorithm.

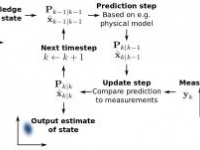

Take the Kalman filter. It was invented in 1960 to predict variables in a system that is continuously changing, such as a flying plane. The algorithm estimates the current state of the system based on data from previous points in time. It also estimates the uncertainty in the system and seeks to filter out “noise,” meaning useless, random, or inaccurate information, by giving it lower weight in the final estimates. The most famous use of the Kalman filter was to track the path of the Apollo spacecraft in real time. Now it is widely used across a range of disciplines.88
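The weighting idea can be sketched in a few lines of Python. This is a simplified one-dimensional version, assuming a single quantity that barely changes between readings; real trackers use matrix forms with position and velocity. The function name, parameters, and readings are all illustrative.

```python
def kalman_1d(measurements, process_var, measurement_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a value assumed to drift only slightly.

    process_var     -- how much the true value may change per step
    measurement_var -- how noisy each reading is assumed to be
    x0, p0          -- initial estimate and its uncertainty (variance)
    Returns the list of estimates, one per measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the estimate carries over, but uncertainty grows.
        p = p + process_var
        # Update: the gain k is the weight given to the new reading.
        k = p / (p + measurement_var)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical noisy readings of a quantity whose true value is near 10.
readings = [10.4, 9.7, 10.2, 9.9, 10.1, 9.8]
estimates = kalman_1d(readings, process_var=1e-4, measurement_var=0.25,
                      x0=0.0, p0=1000.0)
print([round(e, 2) for e in estimates])
```

The gain `k` is where the “lower weight” lives: the noisier the readings are assumed to be (a larger `measurement_var`), the less each new reading moves the estimate.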

In 1988, two researchers invented a way to combine data from multiple sources, each of which could be changing at the same time. They named this new algorithm the Interacting Multiple Model (IMM) because it allows several Kalman filters, each built on a different assumption about how the target moves, to run side by side and be weighted against one another.
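A rough sketch of one IMM cycle follows, assuming two scalar Kalman filters that differ only in how much movement they expect: one “steady” and one “maneuvering.” The function name, the switching probabilities, and the readings are hypothetical, and operational trackers work with multi-dimensional states rather than a single number.

```python
import math


def imm_step(xs, ps, mu, z, trans, process_vars, meas_var):
    """One cycle of a scalar IMM filter with n models.

    xs, ps       -- per-model estimates and variances
    mu           -- current probability of each model
    trans[i][j]  -- assumed chance of switching from model i to model j
    Returns (xs, ps, mu, combined_x, combined_p).
    """
    n = len(xs)
    # 1. Mixing: blend the models' estimates according to switching odds.
    c = [sum(trans[i][j] * mu[i] for i in range(n)) for j in range(n)]
    mixed_x, mixed_p = [], []
    for j in range(n):
        w = [trans[i][j] * mu[i] / c[j] for i in range(n)]
        x0 = sum(w[i] * xs[i] for i in range(n))
        p0 = sum(w[i] * (ps[i] + (xs[i] - x0) ** 2) for i in range(n))
        mixed_x.append(x0)
        mixed_p.append(p0)
    # 2. Each model runs its own Kalman predict/update on the reading z.
    new_x, new_p, like = [], [], []
    for j in range(n):
        p_pred = mixed_p[j] + process_vars[j]
        s = p_pred + meas_var                      # innovation variance
        resid = z - mixed_x[j]
        k = p_pred / s
        new_x.append(mixed_x[j] + k * resid)
        new_p.append((1 - k) * p_pred)
        # Gaussian likelihood of the reading under this model.
        like.append(math.exp(-0.5 * resid ** 2 / s) / math.sqrt(2 * math.pi * s))
    # 3. Re-weight the models by how well each explained the reading.
    total = sum(like[j] * c[j] for j in range(n))
    new_mu = [like[j] * c[j] / total for j in range(n)]
    # 4. Combine the per-model estimates into a single output.
    x = sum(new_mu[j] * new_x[j] for j in range(n))
    p = sum(new_mu[j] * (new_p[j] + (new_x[j] - x) ** 2) for j in range(n))
    return new_x, new_p, new_mu, x, p


# Hypothetical track: the target sits still, then starts moving quickly.
trans = [[0.95, 0.05], [0.05, 0.95]]
xs, ps, mu = [0.0, 0.0], [1.0, 1.0], [0.5, 0.5]
history = []
for z in [0.1, -0.1, 0.0, 0.1, 3.0, 6.1, 9.0]:
    xs, ps, mu, x, p = imm_step(xs, ps, mu, z, trans,
                                process_vars=[0.01, 4.0], meas_var=0.25)
    history.append((round(x, 2), [round(m, 3) for m in mu]))
for row in history:
    print(row)
```

While the readings hover near zero, most of the weight goes to the steady model; once they jump, the weight shifts to the maneuvering model. That shift is the sense in which the filters are checked against each other.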

IMM is used for predicting aircraft traffic as well as for target tracking by drones using radar, video, and geolocation data. Today Henk Blom, one of the inventors of IMM, is professor of Air Traffic Management Safety at Delft University of Technology in the Netherlands. Yaakov Bar-Shalom, the other inventor, has taught classes at the Pentagon and published papers including “Multi-Sensor Multi-Target Tracking” and “Target Tracking and Data Fusion: How to Get the Most Out of Your Sensors.”89

In addition to tracking aircraft and drones, the Kalman and IMM algorithms are used to detect other moving targets like animals and people, though with less success, since living things are much less predictable.

Another key use of algorithms in the world of surveillance is to identify potential “terrorists” from big databases of information. To do this, according to documents released by whistleblower Edward Snowden, the NSA has used the common algorithmic learning tool, Random Decision Forests.90 It allows a programmer to create smaller bundles of different data combinations and set up “decision trees” of yes and no answers.

“Having created all those trees, you then bring them together to create your metaphorical forest,” writes Martin Robbins in the Guardian newspaper.91 “You run every single tree on each record, and combine the results from all of them. Very broadly speaking, the more the trees agree, the higher the probability is.”
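The “run every tree and combine the results” step can be sketched as follows. This is a toy version under loud assumptions: each “tree” asks only one yes-or-no question (a so-called stump) rather than a full chain of them, and the records are synthetic pairs of numbers with no real-world meaning. Nothing here reflects how the NSA built or trained its own model.

```python
import random


def train_stump(rows, labels, feature):
    """Pick the threshold on one feature that best separates the labels."""
    best = None
    for t in sorted({r[feature] for r in rows}):
        for above in (0, 1):          # label predicted when the value exceeds t
            errors = sum(
                1 for r, y in zip(rows, labels)
                if (above if r[feature] > t else 1 - above) != y
            )
            if best is None or errors < best[0]:
                best = (errors, t, above)
    _, t, above = best
    return feature, t, above


def train_forest(rows, labels, n_trees, rng):
    """Train each stump on a random resample of rows and a random feature."""
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]       # bootstrap sample
        feature = rng.randrange(len(rows[0]))                # random attribute
        forest.append(train_stump([rows[i] for i in idx],
                                  [labels[i] for i in idx], feature))
    return forest


def forest_score(forest, record):
    """Fraction of trees that vote 1 for this record."""
    votes = sum(above if record[f] > t else 1 - above for f, t, above in forest)
    return votes / len(forest)


# Synthetic records: two numeric attributes, label 0 or 1.
rows = [(1, 2), (2, 1), (3, 3), (2, 2), (1, 3),
        (7, 8), (8, 7), (9, 9), (8, 8), (7, 9)]
labels = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

forest = train_forest(rows, labels, n_trees=25, rng=random.Random(0))
high_score = forest_score(forest, (10, 10))
low_score = forest_score(forest, (0, 0))
print(high_score, low_score)
```

The score is only the share of trees that agree. It says nothing about whether the training records were accurate or representative, which is the concern Pasquale raises above.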

Our fourth example is Greedy Fragile, a targeting algorithm developed in 2012 by Paulo Shakarian at the U.S. Military Academy at West Point. Shakarian wrote 30 lines of code that he claimed would help break up networks by attacking mid-level participants, leaving the networks more fragile.

“I remember these special forces guys used to brag about … targeting leaders. And I thought, ‘Oh yeah, targeting leaders of a decentralized organization. Real helpful,’” Shakarian told Wired magazine. Zarqawi’s group, for instance, only grew more lethal after his death. “So I thought: Maybe we shouldn’t be so interested in individual leaders, but in how whole organizations regenerate their leadership.”92

There’s no public record that Greedy Fragile has been used for the drone program, but it is one example of military efforts to mathematically calculate whom to kill. Shakarian has created similar algorithms, such as the Spatio-Cultural Abductive Reasoning Engine (SCARE), to help the military track down roadside bombs in Iraq.93
